Don’t Kill Innovation, But Apply Guardrails For AI

On March 29, 2023, more than 1,100 notable signatories signed an open letter from the Future of Life Institute asking for a moratorium on AI development. This wake-up call to society highlights the need for tech policy to catch up with technology and raises awareness of the pervasive impact AI will have on society for decades to come. Elon Musk, an early backer of OpenAI and one of the most notable signatories, has been advocating for a pause. Per the letter, “Powerful AI systems should be developed only once we are confident that their effects will be positive, and their risks will be manageable.”

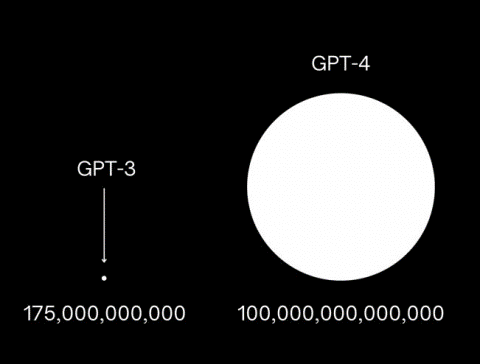

With OpenAI having just released its next powerful LLM (Large Language Model), GPT-4, these AI experts and industry executives have suggested a 6-month pause on the development of any models more powerful than GPT-4, citing the risk of an AI apocalypse. To provide context on the size of GPT-4 compared to its predecessor, GPT-3 uses 175 billion parameters, whereas GPT-4 has been widely rumored to use as many as 100 trillion parameters, a figure OpenAI has not confirmed (see Figure 1).

Figure 1. Size of GPT-4 LLM vs GPT-3 LLM

Understand What Can Go Wrong With AI

- AI will fall into the wrong hands

Technologies can be used for good or for evil. That power lies in the hands of the user. For example, bad actors can create phishing emails personalized to each individual that sound so legitimate you can’t resist clicking on them, only to compromise your system by exposing it to malware.

In another example, scammers cloned the voice of an elderly couple’s grandson to trick them into sending money, claiming he was in jail and needed bail. The scary part was that the scammers used just a few spoken sentences from a YouTube video to clone his voice almost perfectly.

- Humans find it harder to distinguish between originals vs fakes

Programs such as MidJourney can create deceptive, realistic, yet fake images. Samples are floating around the internet, from the Pope wearing a funky puffer jacket to Donald Trump getting arrested and manhandled by police to a realistic fake video of Tom Cruise (from a few years ago). While even an educated, sophisticated mind has trouble separating real content from fake, less-informed audiences may believe almost anything they see on the Internet or on TV. Many radical groups on both sides of the political spectrum have started to use this as a starting point for dangerous propaganda that could lead to undesirable results. Governments could be toppled, political games could be played, and the masses could be swayed, because AI can help create fake news on a massive scale.

One thing that can be required of AI companies is an option for content verification using cryptographic or other strong, trustworthy methods. This could provide a way to differentiate fake from real. If they can't provide that, then they shouldn't be allowed to produce that content in the first place. Content authenticity will remain a challenge as AI proliferates disinformation at an exponential scale.
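As a rough illustration of what cryptographic content verification could look like, here is a minimal sketch using only Python's standard library. It assumes a simplified setup that the article does not specify: the content generator holds a secret key (`SECRET_KEY`, a hypothetical placeholder) and attaches an HMAC tag to everything it produces. Real provenance schemes would more likely use public-key signatures so that anyone can verify content without holding the secret; the shared-key version below only shows the principle.

```python
import hashlib
import hmac

# Hypothetical signing key held by the content generator (assumption for this sketch).
SECRET_KEY = b"demo-key-not-for-production"


def sign_content(content: bytes, key: bytes = SECRET_KEY) -> str:
    """Return a hex HMAC-SHA256 tag that binds the key holder to this exact content."""
    return hmac.new(key, content, hashlib.sha256).hexdigest()


def verify_content(content: bytes, tag: str, key: bytes = SECRET_KEY) -> bool:
    """Check that the tag matches the content, using a constant-time comparison."""
    expected = sign_content(content, key)
    return hmac.compare_digest(expected, tag)


generated = b"bytes of an AI-generated image"
tag = sign_content(generated)

print(verify_content(generated, tag))            # True: content is unchanged
print(verify_content(generated + b"!", tag))     # False: content was altered
```

Any change to the content, even a single byte, invalidates the tag, which is the property a verification option would rely on.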

- A six-month, voluntary, unenforceable moratorium will do little to halt progress in AI

The moratorium proposed by Musk and others calls for a 6-month pause. It is not clear what this arbitrary period will accomplish. First of all, is the pause and/or ban only for US-based companies, or is it applicable worldwide? If it is worldwide, who is going to enforce it? If it is not enforced properly and worldwide, forcing US companies to abandon their efforts for the next 6 months will help other nations get far ahead in the AI arms race.

What happens after 6 months? Would regulatory bodies have caught up by then?

Interestingly enough, asking to pause AI experiments is like polluting factories asking for a pause on emission regulations so they can keep polluting, because they can’t properly measure or mitigate their pollution and would otherwise risk a shutdown. A pause is not going to make government or regulatory bodies move any faster to solve this issue. This is the equivalent of “kicking the can down the road”.

Apply Guardrails For AI Ethics And Policy

- Deploy risk mitigation measures for AI

At this point, OpenAI and other vendors are offering their LLMs on a "use it at your own risk" basis. While ChatGPT has some basic guardrails and safety measures for answering specific questions and topics, LLM-based chatbots have been jailbroken by many users, which leads to unpleasant answers and behaviors (such as professing love to a NY Times reporter or reportedly accelerating a married father's decision to commit suicide). Enterprises that want to use AI need to understand the associated risks and have a plan to mitigate them. If you use these tools in real business use cases and your business gets hurt, the vendors will take no responsibility, and you will be on your own. Are you ready to accept that risk?

Conduct a business risk assessment of AI usage in specific use cases, implement proper security, and keep humans in the loop making the actual decisions, with AI in a helping role. More importantly, AI needs to be field tested before it goes into production, with extensive tests proving that AI-produced results match human-produced results every single time. Make sure there are no biases in the data or the decision-making process. Be sure to capture data snapshots, the models in production, data provenance, and decisions for auditing purposes. Invest in explainable AI to provide a clear explanation of why the AI made a given decision.
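To make the auditing advice concrete, here is a minimal sketch of what a per-decision audit record might capture. Everything in it is an assumption for illustration: the `AuditRecord` fields, the `record_decision` helper, and the loan-style example values are hypothetical and not drawn from any particular product. The idea is simply to store the model version, a fingerprint of the input data, the AI's recommendation, and the human's final decision together.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class AuditRecord:
    """One auditable decision: what the AI suggested and what the human decided."""
    model_version: str
    input_sha256: str
    ai_recommendation: str
    human_decision: str
    reviewer: str
    timestamp: str


def record_decision(model_version: str, input_data: dict, ai_recommendation: str,
                    human_decision: str, reviewer: str) -> AuditRecord:
    # Serialize with sorted keys so the same input always yields the same fingerprint.
    canonical = json.dumps(input_data, sort_keys=True).encode("utf-8")
    return AuditRecord(
        model_version=model_version,
        input_sha256=hashlib.sha256(canonical).hexdigest(),
        ai_recommendation=ai_recommendation,
        human_decision=human_decision,
        reviewer=reviewer,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )


# Hypothetical example: the AI recommends, the human reviewer makes the final call.
record = record_decision(
    model_version="credit-model-2023-04",
    input_data={"applicant_id": 123, "income": 50000},
    ai_recommendation="approve",
    human_decision="reject",
    reviewer="analyst-a",
)
print(json.dumps(asdict(record), indent=2))
```

Keeping the AI recommendation and the human decision as separate fields makes it possible to later measure how often they disagree, which feeds back into the field testing described above.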

Although generative AI can help create realistic-looking documents, marketing brochures, content, or code, humans should spend time reviewing the output for accuracy and bias. In other words, instead of being trusted completely, AI should be used to augment work, if at all, with strong guardrails on what is accepted and expected.

There is also a major security risk in using LLMs, as they are not properly secured as of today. Current security systems are not yet ready to handle the newer AI solutions. There is a strong possibility of IP leaking through simple attacks on LLMs, as student researchers have demonstrated, because many of these systems have weak security protocols in place.

- Use ChatGPT and other LLMs with caution

Keep in mind that most LLMs, including ChatGPT, are still in beta. They not only use the material provided to them but may also store it and retrain their models on that data. Unless specific policies govern how employees use these tools, employees could get lazy, rely on them, and leak confidential information. In a classic case, Samsung employees used ChatGPT to fix faulty code and asked it to write up a meeting, which turned out to be a colossal mistake. As a result, Samsung’s confidential data, such as semiconductor equipment measurement data and product yield information, was exposed to an outside service. Employees should be given guidelines immediately on what constitutes acceptable use of ChatGPT and other LLMs.

- Understand ChatGPT is not a thinker or decision intelligence system

People assume that ChatGPT and other LLMs can automatically understand how the world works and make decisions that will end the human world. They tend to forget that it is merely a large language model trained on the world’s publicly available data. This means that if something hasn’t happened or been written about before, it can’t give you reliable information; it lacks the context and subjective judgment that human brains bring. Even with that information, it is very error-prone in its current iteration. It is the humans who use these systems who will use them for either good or bad.

Bottom Line: Don’t Kill Innovation

How society deploys guardrails for AI should not be about stopping a certain technology or company, unless these companies really go rogue. There was a similar outcry about personal data collection when IoT first became popular, yet wearing Fitbits and sharing data through quantified-self technologies now seems to be a common and accepted practice, given the right privacy policies and permission requests.

Policy makers, technology companies, and ethicists should shift their focus to the guardrails within which these systems operate. The focus should be on regulations, security, oversight, and governance. Define what is acceptable and what is not. Define how many decisions can be automated and how many require human involvement. At the end of the day, AI and analytics are here to stay. Just pausing development for 6 months is not going to let the safeguard measures catch up.
